Inside the Launch of NVIDIA DGX Spark: What The Smallest AI Supercomputer Means for Enterprise

NVIDIA’s DGX Spark is shaping up as the most compact true AI supercomputer yet — here’s what enterprises must know about its architecture, value proposition and deployment implications.

Introduction

The AI‐infrastructure landscape continues to evolve at a breakneck pace. Recently, NVIDIA released the DGX Spark — positioned as the “smallest AI supercomputer” designed specifically for enterprise deployments. While the term “supercomputer” often evokes room-sized systems in national labs, DGX Spark signals a shift: the supercomputing power of today is becoming accessible to enterprise environments in modular, scalable form.

In this article we’ll go deep into what DGX Spark brings to the table, how its architecture differs from previous systems, what enterprise leaders should evaluate before deploying such infrastructure, and why “smaller” doesn’t necessarily mean “less capable.”

What is DGX Spark?

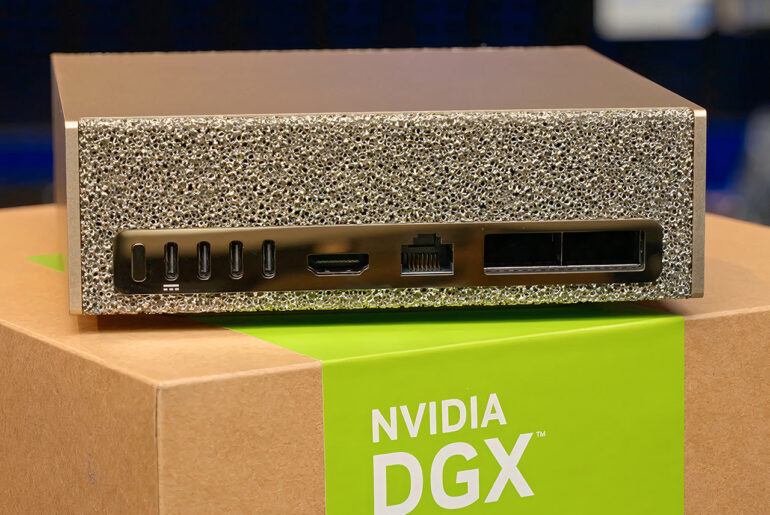

DGX Spark is described by NVIDIA as a compact AI infrastructure system that includes high-density GPU modules, optimized cooling and power systems, integrated networking, and a software stack tailored for AI model training, inference and fine-tuning. The key promise: deliver “supercomputer”-level performance in an enterprise-friendly footprint.

From an enterprise architecture perspective, this means: shorter deployment lead times, lower facility upgrade overhead, and closer proximity to the business environment. Instead of shipping petabyte-scale model training out to remote data centers, enterprises can potentially house high-performance AI infrastructure on-premises or in regional edge locations.

Key Architectural Innovations

Here are the standout architectural features of DGX Spark worth noting:

- High-density GPU module stack: The system incorporates multiple next-generation GPUs optimized for large-scale model training and multimodal inference.

- Integrated networking and I/O fabric: Latency and bandwidth bottlenecks have historically limited the scaling of AI systems. DGX Spark addresses this with an optimized interconnect that enables distributed compute across nodes with minimal overhead.

- Thermal and power optimization: Unlike many “lab-scale” systems that require industrial-grade power and cooling, DGX Spark is designed for enterprise racks, lowering the barrier for deployment in standard data-center spaces.

- Pre-integrated AI software stack: The system ships with optimized drivers, model training frameworks, orchestration tools and monitoring dashboards, reducing the “integration tax” for enterprises that would otherwise build the stack themselves.

These innovations reduce friction: less waiting for infrastructure, less custom wiring, and a more predictable path from “box arrival” to “model deployment.” That means enterprises can focus on use-cases and outcomes rather than hardware plumbing.
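The interconnect point can be made concrete with a back-of-envelope estimate. In a ring all-reduce, a standard pattern for synchronizing gradients in data-parallel training, each of N nodes sends and receives roughly 2(N-1)/N times the gradient size per step, so synchronization time scales inversely with link bandwidth. The sketch below is a simplified model that ignores latency and the overlap of compute with communication; the model size and link speeds are hypothetical placeholders, not DGX Spark specifications.

```python
def ring_allreduce_seconds(gradient_bytes: float, num_nodes: int, link_gbps: float) -> float:
    """Estimate the time for one ring all-reduce, ignoring latency and overlap.

    Each node transfers about 2 * (N - 1) / N * gradient_bytes:
    a reduce-scatter phase followed by an all-gather phase.
    """
    if num_nodes < 2:
        return 0.0  # a single node has nothing to synchronize
    bytes_per_node = 2 * (num_nodes - 1) / num_nodes * gradient_bytes
    link_bytes_per_second = link_gbps * 1e9 / 8  # gigabits/s -> bytes/s
    return bytes_per_node / link_bytes_per_second


if __name__ == "__main__":
    # Hypothetical example: 8 billion parameters with 2-byte (bf16) gradients.
    grad_bytes = 8e9 * 2
    for gbps in (100, 200, 400):
        t = ring_allreduce_seconds(grad_bytes, num_nodes=2, link_gbps=gbps)
        print(f"{gbps} Gb/s link: ~{t:.2f} s per gradient sync")
```

In this simplified model, doubling link bandwidth halves the synchronization time per step. That is why interconnect design, not just GPU count, governs how well a multi-node setup scales.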

Why “Smallest Supercomputer” Matters

The descriptor “smallest AI supercomputer” is not just marketing. It reflects three meaningful shifts:

- Accessibility of compute scale: Historically, supercomputing resources were the domain of large cloud providers or national labs. Now, enterprises of many sizes can harness considerable AI compute closer to their operations.

- Proximity to data and decision‐making: Keeping infrastructure near the data source matters for latency-sensitive workloads, data governance, and compliance contexts. A smaller supercomputer means you can place compute where business operations occur.

- Faster iteration cycles: When infrastructure is purpose-designed, integrated and accessible, innovation velocity increases. Enterprises don’t need to book time on distant clusters; they can iterate locally and frequently.

For enterprises moving from experimentation to production, these shifts matter a great deal.
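The data-proximity argument is partly arithmetic. Moving a large dataset to remote compute takes time proportional to its size divided by the effective link throughput. Here is a minimal sketch, assuming decimal units (1 TB = 10^12 bytes) and a hypothetical efficiency factor for protocol overhead and contention. The dataset size and link speeds are illustrative, not measurements.

```python
def transfer_hours(dataset_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Hours to move `dataset_tb` terabytes over a `link_gbps` gigabit/s link.

    `efficiency` is an assumed fraction of nominal bandwidth actually achieved;
    0.8 is a placeholder, not a measured value.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = dataset_tb * 1e12 * 8                 # decimal terabytes -> bits
    effective_bps = link_gbps * 1e9 * efficiency  # usable bits per second
    return bits / effective_bps / 3600            # seconds -> hours


if __name__ == "__main__":
    # Illustrative: a 50 TB dataset over a 1 Gb/s WAN link vs a 10 Gb/s link.
    for gbps in (1, 10):
        print(f"50 TB over {gbps} Gb/s: ~{transfer_hours(50, gbps):.1f} hours")
```

Transfers measured in days rather than minutes are one reason latency-sensitive or frequently refreshed workloads favor compute placed next to the data.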

Business Use-Cases and Deployment Scenarios

Here are several enterprise use-cases where DGX Spark makes a compelling case:

- Generative model fine-tuning and inference: Enterprises customizing large language or multimodal models often face latency or bandwidth constraints when relying on remote cloud infrastructure. DGX Spark can localize that work.

- Edge AI for regulated sectors: Sectors such as finance, healthcare, manufacturing, and defense often have strict data sovereignty or latency needs. A compact supercomputer supports on-premises AI while meeting compliance demands.

- Hybrid training + inference platforms: Some enterprises adopt a hybrid model: training at scale in the cloud, inference on-prem. With DGX Spark, they can bring both fine-tuning and inference closer to the edge.

- Research and innovation labs inside enterprises: Organizations with internal AI research teams can deploy DGX Spark to support rapid prototyping, model experimentation and analytics without needing cloud tenancy or sharing resources externally.

In short, the system is not just for “big tech” — it enables midsize enterprises to bring frontier AI capabilities in-house.

Important Considerations Before Deployment

Despite the compelling value proposition, enterprises should evaluate carefully before making large infrastructure commitments:

- Power & cooling readiness: Even though optimized, high-density compute still draws significant power and generates heat. Facility readiness remains critical.

- Data maturity: The best hardware won’t deliver value if the data pipelines, labeling, feature stores and governance are weak. Infrastructure amplifies capability, but doesn’t create it from scratch.

- Model lifecycle management: It’s one thing to spin up a “supercomputer” — it’s another to operate, monitor, manage drift, and update production models. Operational maturity must accompany hardware investment.

- Cost versus cloud scaling: For some workloads, cloud or managed AI infrastructure may still be more cost-effective. Enterprises should compare total cost of ownership across on-prem, edge and cloud options rather than assuming that owning compute is always best.

- Vendor lock-in and agility: Deploying systems like DGX Spark may introduce dependencies on specific hardware, networking or software stacks. Enterprises must design for future flexibility and hybrid model support.

By treating the investment as part of a broader AI infrastructure strategy rather than a one-time hardware purchase, organizations will be better positioned to navigate these considerations.
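The power and cost-versus-cloud considerations can be combined in one simple total-cost-of-ownership model. On-prem annual cost is amortized capex, plus an operations allowance, plus energy for the hours the system is busy. Energy is computed as power draw × hours × electricity price × PUE (power usage effectiveness, the facility overhead multiplier for cooling). That total is compared against an hourly cloud rate for the same busy hours. Every number below is a hypothetical placeholder. The model deliberately omits financing, idle power, staffing detail and resale value. It sketches the structure of the comparison and makes no pricing claim about DGX Spark or any cloud provider.

```python
from dataclasses import dataclass
from typing import Optional

HOURS_PER_YEAR = 24 * 365  # ignores leap years


@dataclass
class OnPremOption:
    """Hypothetical cost inputs for an on-prem system (all values assumed)."""
    capex: float           # purchase price
    lifetime_years: float  # straight-line amortization period
    power_kw: float        # average draw while busy; idle draw ignored for simplicity
    pue: float             # power usage effectiveness: facility/cooling multiplier
    price_per_kwh: float   # electricity price
    ops_per_year: float    # support, maintenance and admin allowance

    def fixed_per_year(self) -> float:
        return self.capex / self.lifetime_years + self.ops_per_year

    def energy_per_busy_hour(self) -> float:
        return self.power_kw * self.pue * self.price_per_kwh

    def annual_cost(self, utilization: float) -> float:
        """Fixed costs plus energy for the fraction of the year the system is busy."""
        busy_hours = HOURS_PER_YEAR * utilization
        return self.fixed_per_year() + self.energy_per_busy_hour() * busy_hours


def cloud_annual_cost(hourly_rate: float, utilization: float) -> float:
    """Pay-as-you-go cost for the same number of busy hours."""
    return hourly_rate * HOURS_PER_YEAR * utilization


def breakeven_utilization(option: OnPremOption, hourly_rate: float) -> Optional[float]:
    """Utilization above which on-prem is cheaper than cloud, or None if unreachable."""
    margin_per_hour = hourly_rate - option.energy_per_busy_hour()
    if margin_per_hour <= 0:
        return None  # energy alone costs more per hour than the cloud rate
    u = option.fixed_per_year() / (margin_per_hour * HOURS_PER_YEAR)
    return u if u <= 1 else None  # more than 100% utilization is impossible


if __name__ == "__main__":
    # Every number here is an illustrative placeholder, not a quote or spec.
    box = OnPremOption(capex=40_000, lifetime_years=3, power_kw=1.0, pue=1.5,
                       price_per_kwh=0.15, ops_per_year=5_000)
    u = breakeven_utilization(box, hourly_rate=4.0)
    print("break-even utilization:", "never" if u is None else f"{u:.0%}")
```

The break-even utilization is the most useful output. If realistic busy time sits well below it, the cloud option is likely cheaper under these assumptions. Well above it, ownership starts to pay off.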

Comparing On-Prem vs Cloud AI Infrastructure

Let’s compare three deployment models in the context of DGX Spark’s value proposition:

| Model | Strengths | Weaknesses |
|---|---|---|
| Cloud-only | Elastic scale, minimal upfront capex | Latency, data-egress cost, less control |
| On-prem heavy (traditional super-rack) | Full control, maximal performance | High facility cost, long deployment lead time |
| Compact supercomputer (e.g., DGX Spark) | Balance of scale + proximity + relatively faster deployment | Still requires facility readiness, less elasticity than cloud |

For enterprises that prioritize latency, data locality and operational control, while still wanting “supercomputer”-level compute, the compact supercomputer model offers a compelling middle ground between cloud and full on-prem racks.

Operational & Organizational Impact

Deploying a system like DGX Spark is not only a hardware decision — it drives organizational change:

- IT and infrastructure collaboration: The compute team, facilities engineering, and AI/ML teams must coordinate closely during rollout and operation.

- Data engineering upgrade: Data pipelines must feed the new hardware consistently and at scale. Otherwise expensive accelerators sit idle waiting for data, and the system’s throughput goes unused.

- AI governance and monitoring: As model complexity increases, organizations must implement drift detection, performance tracking, cost monitoring and security auditing.

- Talent and workflow shift: With frontier compute available internally, teams will iterate faster — but they must build workflows that capture, share and scale insights across the enterprise.
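“Feeding the hardware consistently” usually means overlapping data loading with compute, so the accelerator is not left waiting on I/O. A minimal, framework-agnostic sketch of that idea uses a background producer thread to fill a bounded queue that the training loop consumes. `load_batches` and its sleep-based timing are hypothetical stand-ins. Production stacks would typically rely on their framework’s own data-loading utilities instead.

```python
import queue
import threading
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")
_DONE = object()  # sentinel marking the end of the stream


class _Failure:
    """Wraps an exception raised by the producer so the consumer can re-raise it."""
    def __init__(self, exc: BaseException) -> None:
        self.exc = exc


def prefetch(source: Iterable[T], depth: int = 4) -> Iterator[T]:
    """Yield items from `source` while a background thread reads ahead.

    The bounded queue (maxsize=depth) caps memory use and applies backpressure:
    the producer blocks once `depth` items are waiting to be consumed.
    """
    buf: queue.Queue = queue.Queue(maxsize=depth)

    def producer() -> None:
        try:
            for item in source:
                buf.put(item)
            buf.put(_DONE)
        except Exception as exc:  # forward loader errors to the consumer
            buf.put(_Failure(exc))

    # Daemon thread: it will not keep the process alive if the consumer stops early.
    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is _DONE:
            return
        if isinstance(item, _Failure):
            raise item.exc
        yield item


if __name__ == "__main__":
    import time

    def load_batches(n: int) -> Iterator[int]:
        """Hypothetical loader that simulates 10 ms of I/O per batch."""
        for i in range(n):
            time.sleep(0.01)
            yield i

    for batch in prefetch(load_batches(5), depth=2):
        print("training on batch", batch)  # stand-in for a compute step
```

The queue depth is the design lever. Deeper queues absorb more I/O jitter at the cost of memory, and shallower ones keep memory tight but expose compute to loader stalls.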

In many cases, the real bottleneck for value is not the compute power — it’s aligning the organization and processes to operationalize that compute effectively.
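Drift detection, listed above under AI governance, can start simply. Compare the distribution of a feature or model score in production against a reference window. Here is a minimal sketch using the Population Stability Index (PSI), which is one common drift metric among several; others include Kolmogorov-Smirnov tests and KL divergence. The bin count, the epsilon floor and the 0.2 alert threshold are conventional rules of thumb, assumed here. None of them come from this article or from NVIDIA’s software stack.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def psi(reference: Sequence[float], current: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e).

    e = share of the reference sample in a bin, a = share of the current sample.
    Bin edges come from reference quantiles; eps keeps empty bins out of log(0).
    """
    if not reference or not current or bins < 2:
        raise ValueError("need non-empty samples and at least 2 bins")
    ref_sorted = sorted(reference)
    n = len(ref_sorted)
    # Interior cut points at the 1/bins, 2/bins, ... quantiles of the reference.
    edges = [ref_sorted[n * k // bins] for k in range(1, bins)]

    def shares(sample: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for x in sample:
            counts[bisect_right(edges, x)] += 1  # bin index in 0..bins-1
        return [max(c / len(sample), eps) for c in counts]

    expected, actual = shares(reference), shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drifted(reference: Sequence[float], current: Sequence[float],
            threshold: float = 0.2) -> bool:
    """Flag drift using a commonly cited rule-of-thumb PSI threshold (assumed)."""
    return psi(reference, current) > threshold


if __name__ == "__main__":
    baseline = [float(x) for x in range(1000)]
    shifted = [x + 500 for x in baseline]
    print(round(psi(baseline, baseline), 3), drifted(baseline, shifted))
```

In practice a check like this would run on a schedule against logged features or predictions, with alerts routed to the team that owns the model.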

Looking Ahead: What This Launch Signals for the Industry

The release of DGX Spark carries broader implications:

- Compute decentralization: Instead of compute being reserved for centralized cloud giants, we’ll see more modular supercomputing “pods” inside enterprises and regional data centers.

- Shorter innovation cycles: Having supercomputing capability closer to teams means shorter feedback loops — prototypes turn into production faster.

- Edge intelligence becomes feasible: Industries such as manufacturing, oil & gas, and logistics will increasingly deploy real-time AI at the edge, backed by substantial local compute rather than cloud hops.

- Competitive consolidation: Hardware, software and service providers will compete to deliver “supercomputer in a box” experiences — making AI infrastructure more of a turnkey purchase than a custom build.

For enterprise architects and AI leaders, DGX Spark’s launch is a signal: the compute frontier is becoming democratized, and capability is moving closer to the business operation rather than deep within remote cloud silos.

Conclusion

NVIDIA’s DGX Spark marks a meaningful advance in enterprise AI infrastructure. By packing supercomputer-class compute into a more accessible form factor, the system opens new possibilities for on-premises, edge and hybrid AI strategies. But hardware alone will not turn models into value; success depends on data readiness, operational maturity and organizational alignment.

Enterprises evaluating this class of infrastructure should treat it as part of a broader enablement strategy: compute, data, governance, talent and workflows. When aligned, they unlock accelerated innovation and differentiated capability. When misaligned, even the most advanced machine sits idle.

Ready to explore how DGX Enterprise AI can help you design and deploy the next-generation AI infrastructure tailored to your organization? Get Started today.